In what is one of the most inventive hacking campaigns to date, cybercrime groups are now hiding malicious code implants in the metadata of image files to covertly steal payment card information entered by visitors to the hacked websites. "We found skimming code hidden within the metadata of an image file (a form of steganography) and surreptitiously loaded by compromised online stores," Malwarebytes researchers said last week. "This scheme would not be complete without yet another interesting variation to exfiltrate stolen credit card data. Once again, criminals used the disguise of an image file to collect their loot." The evolving tactics of the operation, widely known as web skimming or a Magecart attack, come as bad actors find different ways to inject JavaScript, including through misconfigured AWS S3 data storage buckets and by exploiting Content Security Policy to transmit data to a Google Analytics account under their control.

Using Steganography to Hide Skimmer Code in EXIF

Capitalizing on the growing trend of online shopping, these attacks typically work by inserting malicious code into a compromised site, which surreptitiously harvests user-entered data and sends it to a cybercriminal's server, giving the attackers access to shoppers' payment information.

In this week-old campaign, the cybersecurity firm found that the skimmer was not only present on an online store running the WooCommerce WordPress plugin but was also contained in the EXIF (Exchangeable Image File Format) metadata of a favicon image hosted on a suspicious domain (cddn.site). Image files can carry embedded information about the image itself, such as the camera manufacturer and model, the date and time the photo was taken, the location, the resolution, and the camera settings, among other details. Using this EXIF data, the attackers executed a piece of JavaScript that was concealed in the "Copyright" field of the favicon image. "As with other skimmers, this one also grabs the content of the input fields where online shoppers are entering their name, billing address, and credit card details," the researchers said. Besides encoding the captured information in Base64 and reversing the output string, the skimmer transmits the stolen data as an image file to conceal the exfiltration. Stating that the operation may be the handiwork of Magecart Group 9, Malwarebytes added that the skimmer's JavaScript code is obfuscated using the WiseLoop PHP JS Obfuscator library. This is not the first time Magecart groups have used images as attack vectors to compromise e-commerce websites. Back in May, several hacked websites were observed loading a malicious favicon on their checkout pages and then replacing the legitimate online payment forms with a fraudulent substitute that stole users' card details.

Abusing DNS Protocol to Exfiltrate Data from the Browser

However, data-stealing attacks are not necessarily confined to malicious skimmer code.
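The encoding step described above (Base64-encode the captured form data, then reverse the resulting string) is trivially reversible, which matters to analysts inspecting captured exfiltration traffic. The following is a minimal Python sketch, assuming the skimmer Base64-encodes a UTF-8 string of field values and then reverses the output; the report does not describe the real skimmer's serialization, so the field names, separator, and sample values are hypothetical (the card number is a well-known test number).

```python
import base64


def skimmer_style_encode(form_data: str) -> str:
    """Base64-encode the captured text, then reverse the output string."""
    encoded = base64.b64encode(form_data.encode("utf-8")).decode("ascii")
    return encoded[::-1]


def analyst_decode(payload: str) -> str:
    """Undo the obfuscation: reverse the string first, then Base64-decode."""
    return base64.b64decode(payload[::-1]).decode("utf-8")


# Hypothetical captured fields; the "|" separator is chosen for illustration only.
sample = "name=Jane Doe|card=4111111111111111|exp=12/25"
payload = skimmer_style_encode(sample)
print(payload)                   # looks like noise at a glance
print(analyst_decode(payload))   # recovers the original string
```

Because reversal adds no secret key, it only hides the data from casual inspection; anyone who knows the two steps can undo them.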

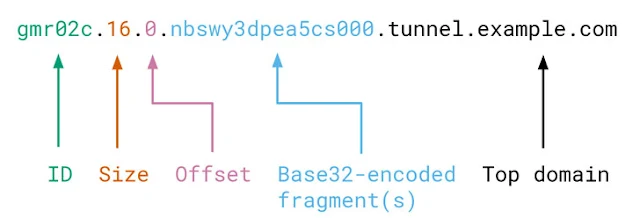

In a separate technique demonstrated by Jessie Li, it is possible to leak data from the browser by abusing dns-prefetch, a latency-reducing mechanism that resolves DNS lookups for cross-origin domains before resources (e.g., files, links) are requested. Called "browsertunnel," the open-source tool consists of a server that decodes the messages it receives and a client-side JavaScript library that encodes and transmits them. The messages themselves are arbitrary strings encoded in a subdomain of the top-level domain the browser is resolving. The server listens for DNS queries, collects the incoming messages, and decodes them to extract the relevant data. Put differently, browsertunnel can be used to gather sensitive information as users carry out specific actions on a web page and then exfiltrate it to a server disguised as DNS traffic. "DNS traffic does not appear in the browser's debugging tools, is not blocked by a page's Content Security Policy (CSP), and is often not inspected by corporate firewalls or proxies, making it an ideal medium for smuggling data in constrained scenarios," Li said.
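The general idea of smuggling a message inside DNS lookups can be sketched in a few lines. This is not browsertunnel's actual wire format, which is not described here; it is a minimal Python illustration, assuming a hypothetical attacker-controlled domain `tunnel.example` and lowercase Base32 labels, of how an arbitrary string can be split into DNS-safe subdomain labels and reassembled by a server that logs incoming queries. DNS limits each label to 63 bytes and a full name to 253 characters, so longer messages would have to be split across several lookups.

```python
import base64

LABEL_MAX = 63                  # DNS limit on a single label
NAME_MAX = 253                  # practical limit on a full hostname
BASE_DOMAIN = "tunnel.example"  # hypothetical attacker-controlled domain


def encode_to_hostname(message: str) -> str:
    """Client side: encode a message into subdomain labels under BASE_DOMAIN."""
    # Base32 uses only A-Z and 2-7, which survive DNS case-folding once
    # lowercased; '=' padding is not a legal hostname character, so drop it.
    b32 = base64.b32encode(message.encode("utf-8")).decode("ascii")
    b32 = b32.rstrip("=").lower()
    labels = [b32[i:i + LABEL_MAX] for i in range(0, len(b32), LABEL_MAX)]
    hostname = ".".join(labels + [BASE_DOMAIN])
    if len(hostname) > NAME_MAX:
        raise ValueError("message too long for a single lookup")
    return hostname


def decode_from_hostname(hostname: str) -> str:
    """Server side: strip the base domain, rejoin labels, restore padding."""
    suffix = "." + BASE_DOMAIN
    if not hostname.endswith(suffix):
        raise ValueError("not a tunnel query")
    b32 = hostname[: -len(suffix)].replace(".", "").upper()
    b32 += "=" * (-len(b32) % 8)  # Base32 input must be a multiple of 8 chars
    return base64.b32decode(b32).decode("utf-8")


name = encode_to_hostname("user typed: hunter2")
print(name)
print(decode_from_hostname(name))  # user typed: hunter2
```

In a browser, a lookup for such a hostname could be triggered by a dns-prefetch hint; the listening server only needs to see the query arrive, not answer it meaningfully.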

Singapore's TraceTogether Tokens are the latest effort to tackle Covid-19 with technology, but they have also reignited a privacy debate. The wearable devices complement the island's existing contact-tracing app, helping to identify people who may have been infected by those who have tested positive for the virus. All users have to do is carry one, and the battery lasts up to nine months without needing a recharge, something one expert said had "stunned" him. The government agency that developed the devices acknowledges that the Tokens, and technology in general, are not "a silver bullet" but should augment the efforts of human contact-tracers. The first to receive the devices are thousands of vulnerable elderly people who do not own smartphones. To get one, they had to provide their national ID and phone numbers; TraceTogether app users recently had to start doing the same. If dongle users test positive for the virus, they must hand their device to the Ministry of Health because, unlike the app, the Tokens cannot transmit data over the internet.

Human contact-tracers will then use the logs to identify and advise others who may have been infected. "It's extremely boring in what it does, which is why I think it's a good design," says hardware engineer Sean Cross. He was one of four experts invited to examine one of the devices before they launched. The group was shown all of its components but was not allowed to switch it on. "It can correlate who you'd been with, who you've infected and, crucially, who might have infected you," Mr Cross adds.

App help

Singapore was the first country to deploy a national coronavirus contact-tracing app.

Local authorities say 2.1 million people have downloaded the software, representing about 35% of the population. It is voluntary for everyone except migrant workers living in dormitories, who account for most of Singapore's 44,000-plus infections. The government says the app helped it quarantine some people more quickly than would otherwise have been possible. But by its own admission, the technology does not work as well as had been hoped. On iPhones, the app must be running in the foreground for Bluetooth "handshakes" to take place, which means users cannot use their handsets for anything else. It is also a big drain on the battery. Android devices do not face the same problem. Digital contact-tracing can in theory be enormously effective, but only if a large percentage of the population takes part. As a result, owners of Apple's devices are likely to be among those asked to use the dongles soon.

Privacy concerns

When the Token was first announced at the beginning of June, there was a public backlash against the government, something that is relatively rare in Singapore. Wilson Low started an online petition calling for it to be scrapped. Almost 54,000 people have signed. "All that is stopping the Singapore government from becoming a surveillance state is the introduction and mandating of the compulsory use of such a wearable device," the petition stated. "What comes next would be laws that state these devices must not be switched off [and must] remain on a person at all times, thus sealing our fate as a police state." Ministers point out that the devices do not log GPS location data or connect to mobile networks, so they cannot be used to monitor a person's movements. Mr Cross agrees that, from what he was shown, the dongles cannot be used as location trackers.
However, he adds that the design is still less privacy-focused than a model promoted by Apple and Google, which is being widely adopted elsewhere. "At the end of the day, the Ministry of Health can go from this anonymous, secret number that only they know, to a phone number, to a person," he explains. By contrast, apps based on Apple and Google's model alert users if they are at risk but do not reveal their identities to the authorities. It is up to individuals to do so when, for example, they register for a test.
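Mr Cross's distinction can be made concrete with a toy model. The Python sketch below is a simplification, not the actual TraceTogether or Apple/Google protocol: every class, method, and phone number is hypothetical, and the real systems use rotating cryptographic identifiers rather than a plain lookup table. In the centralized design the ministry holds the table that maps each anonymous ID back to a phone number; in the decentralized design each phone keeps its own contact log and compares it locally against IDs published by people who tested positive.

```python
import secrets


class CentralRegistry:
    """Centralized model (hypothetical): the authority issues and resolves IDs."""

    def __init__(self):
        self._id_to_phone = {}  # held only by the health ministry

    def issue_id(self, phone: str) -> str:
        temp_id = secrets.token_hex(8)
        self._id_to_phone[temp_id] = phone
        return temp_id

    def resolve_contacts(self, uploaded_log):
        # The ministry turns an infected user's contact log into phone numbers.
        return [self._id_to_phone[i] for i in uploaded_log if i in self._id_to_phone]


class DecentralizedPhone:
    """Decentralized model (hypothetical): random IDs, never registered anywhere."""

    def __init__(self):
        self.my_id = secrets.token_hex(8)
        self.heard = set()  # IDs seen over Bluetooth, stored only on the device

    def record(self, other_id: str):
        self.heard.add(other_id)

    def check_exposure(self, published_positive_ids) -> bool:
        # Matching happens on the phone, so no authority learns who was exposed.
        return bool(self.heard & set(published_positive_ids))


# Centralized: the registry can map an anonymous ID straight to a person.
registry = CentralRegistry()
alice = registry.issue_id("+65 0000 0001")  # placeholder numbers
bob = registry.issue_id("+65 0000 0002")
print(registry.resolve_contacts([bob]))     # ['+65 0000 0002']

# Decentralized: only the phone that heard the ID learns of the exposure.
p1, p2 = DecentralizedPhone(), DecentralizedPhone()
p1.record(p2.my_id)
print(p1.check_exposure([p2.my_id]))        # True
```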

Dr Michael Veale, a digital rights expert at University College London, warns of the potential for mission creep. He gives the example of a government fighting Covid-19 that wants to enforce quarantine. It could do so, he says, by installing Bluetooth sensors in public spaces to detect dongle users who are out and about when they should be self-isolating at home. "All you have to do is install physical infrastructure in the world, and the data it collects can be mapped back to Singapore ID numbers," he explains. "The buildability is the worrying part." However, the head of the agency responsible for TraceTogether plays down such concerns. "There is a high-trust relationship between the government and the people, and there is data protection," says Kok Ping Soon, CEO of GovTech. He adds that he hopes the public recognizes that the health authorities need this data to protect them and their loved ones. Another reason Singapore prefers its own design to Apple and Google's is that it can give epidemiologists greater insight into how an outbreak spreads. This was partly why the UK government initially resisted adopting the tech giants' initiative, until its own effort to work around Apple's Bluetooth restrictions fell short. If Singapore's wearables work as hoped, other nations may be tempted to follow. "...you can make policy decisions which carefully tie restrictions or obligations only to high-risk activities. Otherwise you're left with much blunter tools," says privacy expert Roland Turner, another member of the group Singapore invited to examine its hardware. "There is perhaps a paradoxical outcome that greater freedoms are possible."

Early last week, rumors began to surface that e-commerce giant Amazon would buy an autonomous vehicle startup. By the end of the week, it was a done deal. But what is Amazon doing buying an autonomous vehicle startup? According to a Financial Times report, Amazon recently paid $1.2 billion to acquire self-driving upstart Zoox. This makes it Amazon's biggest foray into self-driving technology and one of the company's largest acquisitions ever. To be honest, Zoox isn't one of the startups in this field that ranks high on my radar, so let's take a look at the company before trying to work out what Amazon is doing. Zoox was founded in 2014 and is based in California. The company was started with the goal of building an autonomous vehicle specifically for the "robotaxi" market. Think of it as a potential competitor to the likes of Alphabet's (formerly Google's) Waymo, Yandex, and Uber's self-driving division. What makes Zoox unique, though, is that it is developing its own vehicle too. Most self-driving companies focus on developing software and sensors to retrofit to existing vehicles. For now, Zoox has been retrofitting Toyotas, but it plans to unveil its debut vehicle in the near future. After some unfortunate layoffs due to the coronavirus, Zoox now has around 900 staff working on its self-driving technology. To date, the company has reportedly raised around $1 billion in startup capital, and a couple of years ago it was valued at over $3 billion. It looks like Amazon may have gotten a good deal here, or Zoox was undervalued, but how does Amazon expect to get its money back? In its official announcement of the acquisition, Amazon said it bought the startup to help Zoox realize its vision.
I doubt that's the real reason; companies don't buy other companies just to help them out. Amazon could have invested, but no, it bought the company. Plus, Zoox, a company that once had a valuation of more than twice what Amazon paid, accepted the deal. Something tells me there's more going on here than we've been told. What exactly, though, remains unclear. The e-commerce giant has already dipped its toe into the robotics and electric vehicle worlds, but never both at once. In 2012, Amazon bought Kiva Systems, a company that made warehouse robots for moving goods around commercial buildings. In February last year, Amazon invested around $440 million in EV startup Rivian to build a fleet of sustainable electric delivery vans.

Amazon doesn't really have much to gain for its existing business model by building a robotaxi division. Many have questioned the robotaxi business model itself, arguing that robotaxis won't necessarily be that profitable compared with regular human-driven taxis. In the last quarter of 2019, Amazon spent eight times as much ($9.6 billion) on shipping goods to customers as it paid for Zoox. That makes the Zoox acquisition look like pocket change in comparison. For Amazon, an e-commerce company, the real money is in delivery systems, or rather in being able to deliver more with less human involvement, because humans need sleep and robots don't. Instead, the company could and should explore autonomous technology for deliveries. Imagine the potential if Amazon could get Rivian, Kiva (now Amazon Robotics), and Zoox in a room together. Rivian is developing one of the most anticipated electric trucks, the R1T. However, according to multiple reports, Zoox's foray into vehicle development has left a lot to be desired. Perhaps the longer-term ambition is for Amazon to tie all these business units together into one cohesive, sustainable, and autonomous delivery division.
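The comparisons made in this piece follow directly from the figures it quotes. A quick check in Python, using only those numbers ($1.2 billion paid for Zoox, a past valuation of "over $3 billion", and $9.6 billion in Q4 2019 shipping costs); because the valuation is given as a lower bound, the second ratio is a lower bound too.

```python
zoox_price = 1.2e9            # reported acquisition price, USD
zoox_past_valuation = 3.0e9   # "over $3 billion", so treat as a lower bound
q4_2019_shipping = 9.6e9      # Amazon's Q4 2019 shipping spend, USD

shipping_ratio = q4_2019_shipping / zoox_price
valuation_ratio = zoox_past_valuation / zoox_price

print(f"Q4 2019 shipping vs Zoox price: {shipping_ratio:.1f}x")          # 8.0x
print(f"Past valuation vs Zoox price: at least {valuation_ratio:.1f}x")  # 2.5x
```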

Think more along the lines of Nuro's painfully cute driverless delivery robot, and I think we're getting somewhere. For now, though, Amazon has said it will leave Zoox to its own devices to keep building its own vehicle and self-driving technology. But if Amazon wants to make its money back, it should get Zoox working on delivery robots to cut that huge shipping bill. Amazon has all the pieces of the puzzle; it just needs to put them together.

Artificial intelligence has earned a bad reputation over the years. For some, the term AI has become synonymous with mass unemployment, mass enslavement, and the mass extermination of humans by robots.

For others, AI often conjures dystopian images of Terminator, The Matrix, HAL 9000 from 2001: A Space Odyssey, and warning tweets from Elon Musk.

However, many experts believe those interpretations don't do justice to one of the technologies that will have a great deal of positive impact on human life and society. Augmented intelligence (AI), also referred to as intelligence augmentation (IA) and cognitive augmentation, is a complement, not a replacement, to human intelligence. It's about helping humans become faster and smarter at the tasks they're performing. At its core, augmented intelligence is not technically different from what's already being presented as AI. It is a slightly different perspective on technological advances, especially those that allow computers and software to take part in tasks that were thought to be exclusive to humans. And though some may consider it a marketing term and another way to renew hype in an already-hyped industry, I think it will help us better understand a technology whose limits even its creators can't define.

What's wrong with AI (artificial intelligence)?

The problem with artificial intelligence is that it's vague. Artificial means a substitute for the natural. So when you say "artificial intelligence," it already implies something equivalent to human intelligence. This definition alone is enough to cause fear and panic about how AI will affect business and life itself. For the moment, those concerns are largely misplaced. True artificial intelligence, also known as general and super AI, which can reason and decide as humans do, is still at least decades away. Some think creating general AI is a futile quest and something we shouldn't pursue at all. What we have right now is narrow AI, or AI that is efficient at performing a single task or a limited set of tasks. To be fair, technological advances in AI do cause challenges, but perhaps not the ones that are so often amplified and discussed.
As with every technological revolution, jobs will be displaced, possibly in larger proportions than in previous iterations.

For instance, self-driving trucks, one of the most cited examples, will affect the jobs of a large number of truck drivers. Other jobs may disappear, just as the industrialization of agriculture drastically reduced the number of human laborers working on plantations and farms. But that doesn't mean humans will be rendered obsolete as AI becomes dominant. There are many human skills that only full-blown human-level intelligence (if it is ever created) could replicate. Even trivial tasks, such as picking up objects of different shapes and putting them in a box, something a four-year-old child can do, are extremely complicated from an AI perspective. In fact, I believe (and I'll elaborate on this in a future post, so stay tuned) that AI will enable us to focus on what makes us human instead of spending our time on boring things that robots can do for us.

What's right with AI (augmented intelligence)?

When we look at AI from the augmented intelligence perspective, many interesting opportunities arise. Humans are facing a big challenge, one they themselves have created. Thanks to advances in cloud computing and mobility, we are generating and storing enormous amounts of data. This can be simple things, such as how much time visitors spend on a website and which pages they visit. But it can also be more valuable and critical information, such as health, weather, and traffic data. Thanks to smart sensor technology, the internet of things (IoT), and ubiquitous connectivity, we can collect and store information from the physical world in ways that were previously impossible.
In these data stores lie great opportunities to reduce congestion in cities, detect signs of cancer at earlier stages, help students who are falling behind in their courses, find and prevent cyberattacks before they do damage, and much more. The problem is that sifting through this data and finding those hidden insights is beyond human capacity. As it happens, this is exactly where AI (augmented intelligence), and machine learning in particular, can support human experts. AI is especially good at analyzing huge reams of data and finding patterns and correlations that would either go unnoticed by human analysts or take them a very long time to find. For instance, in health care, an AI algorithm can analyze a patient's symptoms and vital signs, compare them with the patient's history, her family's history, and the records of the many other patients it has on file, and help her doctor by suggesting what the causes might be.

And all of that can be done in seconds or less. Likewise, AI algorithms can examine radiology images many times faster than humans, helping doctors treat more patients. In education, AI can support both teachers and learners. For instance, AI algorithms can monitor students' reactions and interactions during a lesson and compare that data with historical data gathered from thousands of other students.

They can then find where those students are potentially lagging and where they are performing well. For teachers, AI will provide feedback on each of their students that would previously have required one-on-one tutoring. This means teachers will be able to spend their time where they can have the most impact on their students. For students, AI assistants can help them improve their learning skills by providing complementary material and exercises that fill in the gaps in areas where they are lagging or are likely to face challenges later on. As these and many other examples show, AI is not about replacing human intelligence but about amplifying or augmenting it, enabling us humans to make use of the deluge of data we're generating. (I personally think intelligence augmentation or amplification is a more fitting term. Its acronym (IA) can't be confused with AI, and it better describes the functionality of AI and similar technologies. Augmented intelligence refers to the result of combining human and machine intelligence, while intelligence amplification refers to the functionality these technologies provide.) That said, as I mentioned before, we should not dismiss the challenges AI poses, both those mentioned here and those I've discussed in past posts, such as privacy and bias.
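The classroom scenario described above boils down to comparing one learner's results against historical data from many others. Below is a minimal Python sketch, assuming per-topic scores on a 0-100 scale and a deliberately simple rule (flag any topic where the student scores more than one standard deviation below the historical mean); the topics, scores, and threshold are all hypothetical, and a real educational system would use far richer signals than test scores.

```python
from statistics import mean, stdev

# Hypothetical historical scores per topic from past students (0-100 scale).
history = {
    "fractions": [72, 80, 65, 90, 78, 85],
    "geometry": [60, 70, 75, 68, 72, 66],
    "algebra": [55, 62, 70, 58, 65, 61],
}


def find_gaps(student_scores, history, z_threshold=-1.0):
    """Return topics (with z-scores) where the student is well below the norm."""
    gaps = {}
    for topic, score in student_scores.items():
        past = history[topic]
        z = (score - mean(past)) / stdev(past)  # distance from typical, in SDs
        if z < z_threshold:
            gaps[topic] = round(z, 2)
    return gaps


student = {"fractions": 58, "geometry": 71, "algebra": 63}
print(find_gaps(student, history))  # only "fractions" is flagged
```

A teacher-facing tool could then route extra exercises to the flagged topics, which is the "fill in the gaps" idea in miniature.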

But instead of fearing artificial intelligence, we should embrace augmented intelligence and find ways to use it to alleviate those fears and address the challenges that lie ahead.

The world's largest site for software developers is abandoning decades-old coding terms, such as "master" and "slave", to remove references to slavery. GitHub chief executive Nat Friedman said the company is working on changing the term "master", used for the main version of code, to a neutral term. The firm, owned by Microsoft, is used by 50 million developers to store and update their coding projects. This is the latest step in a campaign to remove such terms from software jargon.

The master-slave relationship in computer science generally refers to a system in which one component, the master, controls other copies or processes. The years-old campaign to replace such terms has gained fresh impetus amid the resurgence of Black Lives Matter protests in the United States. Mr Friedman's announcement came in a Twitter reply to Google Chrome developer Una Kravets, who said she would be happy to rename the "master" branch of the project to "main". "If it prevents even a single black person from feeling more isolated in the tech community, feels like a no-brainer to me," she wrote. GitHub users can already choose whatever names they like for the various versions and branches of a project. But changing the default terminology is likely to have a significant impact on the vast number of individual projects hosted on the platform.

Blacklists and masters

In recent years, several major projects have tried to move away from such language, preferring terms like "replicas" or similar words over "slaves", although the old terms remain widely understood and used. Other terms are also being revised. For example, Google's Chromium web browser project and the Android operating system have both urged developers to avoid the terms "blacklist" and "whitelist" for lists of things that are explicitly banned or allowed. Chromium's documentation instead calls for "racially neutral" language, because "terms such as 'blacklist' and 'whitelist' reinforce the notion that black=bad and white=good." It suggests using "blocklist" and "allowlist" instead. But such moves have not been without controversy. Critics point out that the word "master" is not always used in a racially charged way. Rather, in software development, it is used in the same way as in audio recording: a "master" from which all copies are made. Others have raised concerns about compatibility and ease of understanding if a variety of terms are used. Nor are such arguments new, despite the current resurgence: in 2003, Los Angeles County required equipment suppliers to stop using the "unacceptable" terms and to find alternatives.

Is my car hallucinating? Is the algorithm that runs the police surveillance system in my city paranoid? Marvin the android in Douglas Adams’s Hitchhiker’s Guide to the Galaxy had a pain in all the diodes down his left-hand side. Is that how my toaster feels? This all sounds ludicrous until we realize that our algorithms are increasingly being made in our own image. As we’ve learned more about our own brains, we’ve enlisted that knowledge to create algorithmic versions of ourselves.

These algorithms control the speeds of driverless cars, identify targets for autonomous military drones, compute our susceptibility to commercial and political advertising, find our soulmates on online dating services, and evaluate our insurance and credit risks. Algorithms are becoming the near-sentient backdrop of our lives. The most popular algorithms currently being put into the workforce are deep learning algorithms. These algorithms mirror the architecture of human brains by building complex representations of information.

They learn to understand environments by experiencing them, identify what seems to matter, and figure out what predicts what. Being like our brains, these algorithms are increasingly at risk of mental-health problems. Deep Blue, the algorithm that beat world chess champion Garry Kasparov in 1997, did so through brute force, examining millions of positions a second, up to 20 moves into the future. Anyone could understand how it worked, even if they couldn’t do it themselves.
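Deep Blue’s approach belongs to the family of game-tree search: look ahead through possible moves, score the resulting positions, and assume each side always picks its best reply. The Python sketch below shows textbook minimax with alpha-beta pruning over a tiny hand-made tree. It illustrates the technique only; it is not Deep Blue’s actual search, which ran on custom hardware with a far more elaborate evaluation function, and the tree values are hypothetical.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf):
    """Minimax with alpha-beta pruning over a nested-list game tree.

    Leaves are numbers (position scores from the maximizer's point of view);
    inner nodes are lists of child nodes.
    """
    if not isinstance(node, list):
        return node
    if maximizing:
        best = -math.inf
        for child in node:
            best = max(best, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, best)
            if alpha >= beta:
                break  # the minimizer would never allow this line
        return best
    best = math.inf
    for child in node:
        best = min(best, alphabeta(child, True, alpha, beta))
        beta = min(beta, best)
        if alpha >= beta:
            break  # the maximizer already has a better option elsewhere
    return best


# Hypothetical two-ply tree: our three moves, each with the opponent's replies.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
print(alphabeta(tree, maximizing=True))  # 3
```

Every step is explicit and inspectable, which is the article’s point: if this search misbehaves, a programmer can trace exactly why.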

AlphaGo, the deep learning algorithm that beat Lee Sedol at the game of Go in 2016, is fundamentally different.

Using deep neural networks, it built its own understanding of the game, considered the most complex of board games. AlphaGo learned by watching others and by playing against itself. Computer scientists and Go players alike are baffled by AlphaGo’s unorthodox play. Its strategy at first seems awkward.

Only in retrospect do we understand what AlphaGo was thinking, and even then it’s not all that clear. To give you a better sense of what I mean by thinking, consider this. Programs such as Deep Blue can have a bug in their programming. They can crash from memory overload.

They can become paralyzed by a never-ending loop or simply return the wrong answer from a lookup table. But all of these problems can be solved by a programmer with access to the source code, the code in which the algorithm was written. Algorithms such as AlphaGo are entirely different. Their problems are not apparent from looking at their source code. They are embedded in the way they represent information. That representation is an ever-changing high-dimensional space, much like walking around in a dream. Solving problems there requires nothing less than a psychotherapist for algorithms. Take the case of driverless cars. A driverless car that sees its first stop sign in the real world will already have seen millions of stop signs during training, when it built up its mental representation of what a stop sign is.

Under various lighting conditions, in good weather and bad, with and without bullet holes, the stop signs it was exposed to contained a bewildering variety of information.

Under most normal conditions, the driverless car will recognize a stop sign for what it is. But not all conditions are normal. Some recent demonstrations have shown that a few black stickers on a stop sign can fool the algorithm into thinking the stop sign is a 60 mph sign. Confronted with something frighteningly similar to the high-contrast shade of a tree, the algorithm hallucinates.

How many different ways can the algorithm hallucinate? To find out, we would have to present it with every possible combination of input stimuli. This means there are potentially infinite ways in which it can go wrong. Crackerjack programmers already know this and take advantage of it by creating what are called adversarial examples.
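The core trick behind many adversarial examples can be shown on a toy linear classifier: nudge every input feature by a small, fixed amount in whichever direction most lowers the score of the correct class. For a linear model that direction is simply the sign of each weight, which is what the fast gradient sign method (FGSM) reduces to in this case. The weights, input, and step size below are hypothetical, and real attacks such as the stop-sign stickers target deep networks, not a three-feature model.

```python
def score(weights, bias, x):
    """Linear classifier: positive score means 'stop sign', negative means not."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_linear(weights, x, epsilon):
    """Shift each feature by epsilon against the gradient of the score.

    For a linear model the gradient of the score with respect to x is just
    `weights`, so stepping by -epsilon * sign(w) lowers the score as fast as
    possible while changing no single feature by more than epsilon.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


weights = [2.0, -1.5, 3.0]  # hypothetical trained weights
bias = -1.0
x = [0.6, 0.2, 0.4]         # hypothetical input the model classifies correctly

x_adv = fgsm_linear(weights, x, epsilon=0.3)
print(score(weights, bias, x))      # positive: classified as a stop sign
print(score(weights, bias, x_adv))  # negative: a small change flips the label
```

The score drops by epsilon times the sum of the absolute weights, so models with many inputs, such as image classifiers with millions of pixels, can be flipped by changes that are tiny per pixel.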

The AI research group LabSix at the Massachusetts Institute of Technology has shown that, by submitting images to Google’s image-classifying algorithm and using the data it sends back, they can identify the algorithm’s weak spots.

They can then do things like fooling Google’s image-recognition software into believing that an X-rated image is just a couple of puppies playing in the grass. Algorithms also make mistakes because they pick up on features of the environment that are correlated with outcomes, even when there is no causal relationship between them. In the algorithmic world, this is called overfitting. When it happens in a brain, we call it superstition. The biggest algorithmic failure due to superstition that we know of so far is known as the parable of Google Flu. Google Flu used what people typed into Google to predict the location and intensity of influenza outbreaks.

Google Flu’s predictions worked well at first, but they grew worse over time until eventually it was predicting twice as many cases as were reported to the US Centers for Disease Control. Like an algorithmic witch doctor, Google Flu was simply paying attention to the wrong things. Algorithmic pathologies might be fixable. But in practice, algorithms are often proprietary black boxes whose updates are commercially protected. Cathy O’Neil’s Weapons of Math Destruction (2016) describes a veritable freak show of commercial algorithms whose insidious pathologies play out collectively to ruin people’s lives.
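The “superstition” failure can be reproduced with a deliberately tiny example. In the hypothetical data below, feature `a` genuinely but imperfectly tracks the label, while feature `b` happens to match the label perfectly in the training set but is unrelated to it afterwards. A learner that keeps whichever single feature scored best on the training data picks `b` and then does no better than a coin flip on new data. This is a sketch of spurious correlation, one source of the overfitting described above, not a model of Google Flu itself.

```python
# Each row: (feature_a, feature_b, label). All values are hypothetical.
train = [
    (1, 1, 1), (0, 0, 0), (1, 1, 1), (0, 0, 0), (1, 1, 1),
    (0, 0, 0), (0, 1, 1), (1, 0, 0), (1, 1, 1), (0, 0, 0),
]
test = [
    (1, 0, 1), (0, 1, 0), (1, 1, 1), (0, 0, 0), (1, 0, 1),
    (0, 1, 0), (1, 0, 0), (0, 0, 1), (1, 1, 1), (0, 0, 0),
]


def accuracy(rows, feature_index):
    """Fraction of rows where the chosen feature equals the label."""
    hits = sum(1 for row in rows if row[feature_index] == row[2])
    return hits / len(rows)


# "Training": keep whichever single feature best predicts the training labels.
names = {0: "a", 1: "b"}
best = max(names, key=lambda i: accuracy(train, i))
print("chosen feature:", names[best])            # b
print("train accuracy:", accuracy(train, best))  # 1.0
print("test accuracy:", accuracy(test, best))    # 0.5
print("test accuracy of a:", accuracy(test, 0))  # 0.8
```

The learner has no way to tell from training accuracy alone that `b`’s perfect record was a coincidence; only new data exposes it.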

The algorithmic fault line that separates the rich from the poor is particularly compelling. Poorer people are more likely to have bad credit, to live in high-crime areas, and to be surrounded by other poor people with similar problems.

Because of this, algorithms target these people with misleading ads that prey on their desperation, offer them subprime loans, and send more police to their neighborhoods, increasing the likelihood that they will be stopped by police for crimes committed at similar rates in wealthier neighborhoods.
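The policing feedback loop described here can be illustrated with a toy simulation. Two hypothetical neighborhoods have exactly the same underlying crime rate, but one starts with slightly more recorded incidents. If all patrols are sent wherever recorded crime is highest, and crime is only recorded where patrols are present, the initial gap grows every day while the other neighborhood’s record freezes. All numbers are invented for illustration, and no real deployment is this simple.

```python
TRUE_RATE = 0.1       # same chance a patrol records an incident in both areas
PATROLS_PER_DAY = 10  # hypothetical daily patrol budget


def simulate(days, recorded):
    """Each day, send every patrol to the area with the most recorded crime."""
    recorded = dict(recorded)
    for _ in range(days):
        target = max(recorded, key=recorded.get)
        # Incidents are only recorded where police are looking.
        recorded[target] += TRUE_RATE * PATROLS_PER_DAY
    return recorded


start = {"north": 11, "south": 10}  # hypothetical starting counts
print(simulate(30, start))
```

After 30 simulated days the north has 41 recorded incidents and the south still has 10, even though residents of both commit offenses at the same rate; the data now appears to justify the very allocation that produced it.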

Algorithms used by the judicial system give these people longer prison sentences and reduce their chances of parole; other algorithms block them from jobs, raise their mortgage rates, demand higher insurance premiums, and so on.

This algorithmic death spiral is hidden in nesting dolls of black boxes: black-box algorithms that hide their processing in high-dimensional representations we can’t access are further hidden inside the black boxes of proprietary ownership.

This has prompted some places, such as New York City, to propose laws requiring that algorithms used by municipal services be monitored for fairness. But if we can’t detect bias in ourselves, why would we expect to detect it in our algorithms?

When we train algorithms on human data, they learn our biases. One recent study led by Aylin Caliskan at Princeton University found that algorithms trained on the news learned racial and gender biases practically overnight.

As Caliskan noted: ‘Many people think machines are not biased. But machines are trained on human data. And humans are biased.’

Social media is a writhing nest of human bias and hatred. Algorithms that spend time on social media sites quickly become bigots. These algorithms are biased against male nurses and female engineers.

They will view issues such as immigration and minority rights in ways that don’t stand up to scrutiny. Given half a chance, we should expect algorithms to treat people as unfairly as people treat each other. But algorithms are overconfident by construction, with no sense of their own fallibility. Unless they are trained to do so, they have no reason to question their own incompetence (much like people).

For the algorithms I’ve described above, the mental-health problems come from the quality of the data they are trained on. But algorithms can also have mental-health problems that stem from the way they are built.

They can forget older things when they learn new information. Imagine learning a new co-worker’s name and suddenly forgetting where you live.

In the extreme, algorithms can suffer from what is called catastrophic forgetting, in which learning new information abruptly wipes out much of what the algorithm had learned before.
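The mechanism behind this kind of forgetting is interference in shared weights, and it shows up even in a deliberately tiny model. The Python sketch below is a hypothetical illustration, not a model of any real system: a two-weight linear model is trained by plain stochastic gradient descent first on task A and then only on task B (the tasks and the helpers `predict`, `mse`, and `sgd` are invented for the example). The weights (1, 1) would fit both tasks perfectly, but nothing in sequential training steers toward them, so learning B drags the shared weight away from what A needed.

```python
from typing import List, Tuple

Example = Tuple[Tuple[float, float], float]  # ((x0, x1), target)

def predict(w: List[float], x: Tuple[float, float]) -> float:
    return w[0] * x[0] + w[1] * x[1]

def mse(w: List[float], data: List[Example]) -> float:
    """Mean squared error of the model on a dataset."""
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def sgd(w: List[float], data: List[Example], lr: float = 0.1, epochs: int = 200) -> List[float]:
    """Plain stochastic gradient descent on squared error, one example at a time."""
    w = list(w)
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, x) - y
            # The derivative of (prediction - y)^2 with respect to w_i is 2 * err * x_i.
            w[0] -= lr * 2 * err * x[0]
            w[1] -= lr * 2 * err * x[1]
    return w

# Hypothetical tasks. Task A only exercises weight 0; task B uses both weights.
task_a: List[Example] = [((1.0, 0.0), 1.0), ((2.0, 0.0), 2.0)]
task_b: List[Example] = [((1.0, 1.0), 2.0)]

w = sgd([0.0, 0.0], task_a)
error_a_before = mse(w, task_a)
w = sgd(w, task_b)  # train on B only; A's examples are never revisited
error_a_after = mse(w, task_a)

print(f"weights after A then B: {w[0]:.2f}, {w[1]:.2f}")            # 1.50, 0.50
print(f"task A error: {error_a_before:.3f} -> {error_a_after:.3f}")  # 0.000 -> 0.625
print(f"errors with w = (1, 1): {mse([1.0, 1.0], task_a)}, {mse([1.0, 1.0], task_b)}")  # 0.0, 0.0
```

In large neural networks the same interference can erase old skills far more drastically. Mixing some old examples back into training on the new task (often called rehearsal or replay) is one common way to reduce it.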

One theory of human age-related cognitive decline rests on a similar idea: when memory becomes overpopulated, brains and computers alike take longer to find what they know.

When something becomes pathological is often a matter of opinion. As a result, mental anomalies in humans routinely go undetected. Synaesthetes such as my daughter, who perceives written letters as colors, often don’t realize that they have a perceptual gift until they’re in their teens.

Evidence based on Ronald Reagan’s speech patterns now suggests that he probably had dementia while in office as US president. And The Guardian reports that the mass shootings that have occurred on nine out of every 10 days for roughly the past five years in the US are often perpetrated by so-called ‘normal’ people who break under feelings of persecution and depression.

In many cases, it takes repeated malfunctioning to detect a problem. A diagnosis of schizophrenia requires at least one month of fairly debilitating symptoms.

Antisocial personality disorder, the modern term for psychopathy and sociopathy, cannot be diagnosed in people until they are 18, and then only if there is a history of conduct disorder before the age of 15.

There are no biomarkers for most mental-health disorders, just as there are no bugs in the code for AlphaGo. The problem is not visible in our hardware. It’s in our software.

The many ways our minds go wrong make every mental-health problem unique unto itself. We sort them into broad categories such as schizophrenia and Asperger’s syndrome, but most are spectrum disorders that cover symptoms we all share to different degrees. In 2006, the psychologists Matthew Keller and Geoffrey Miller argued that this is an inevitable property of the way brains are built.

There is a lot that can go wrong in minds such as ours. Carl Jung once suggested that in every sane man hides a lunatic. As our algorithms become more like ourselves, it is getting easier to hide.